Introduction

Microsoft released Security Copilot worldwide on April 1, 2024. This service provides a natural language, generative AI assistant for Security Operations Center (SOC) analysts.

Security Copilot is a generative AI-powered chat assistant add-on designed for various Microsoft Security tools. It enables security analysts to converse with an AI assistant, share conversations, and use generative AI to summarize incidents, decode scripts, translate languages, create queries, automate tasks, evaluate threat intelligence, and more.

Security Copilot empowers both junior and senior security analysts. Junior analysts can now perform tasks and follow instructions previously expected only of senior employees, while senior analysts can work more efficiently and extend their capabilities. The result is more effective security analysts and less reliance on individual human expertise as the primary determinant of incident response success.

This article describes Security Copilot, related topics, and alternative Microsoft solutions.

What is a Chatbot?

Microsoft introduced chatbot services in 2016 to make web searches more conversational. These chatbots were designed to emulate chatting or texting with a friend or coworker, managing simple interactions within a chat window.

At their core, chatbots function as question-and-answer services. Early versions had lists of frequently asked questions and corresponding answers, often hard-coded into the system as a decision tree. In predictable scenarios, such as taking a pizza order, anticipating user queries was straightforward. However, the main challenge for traditional chatbots was accurately predicting users’ questions.

With advancements in artificial intelligence and the expansion of Q&A databases, modern chatbots can now handle support, purchasing, and sales conversations on many websites and applications. Despite these improvements, traditional chatbots still struggle to engage in truly conversational interactions and are limited in the range of questions and answers they can manage. Even the voice-enabled assistants found on many phones and home assistant devices lacked true conversational capabilities until recently.

LLMs are where AI meets Search

Large Language Models (LLMs) gained mainstream awareness in 2023, driven by OpenAI’s ChatGPT, a chat service built on its GPT (Generative Pre-trained Transformer) models. Prominent LLMs include Google’s Bard (now called Gemini), Meta’s LLaMA, Amazon’s Titan (offered through the Amazon Bedrock service), Elon Musk’s Grok, as well as BERT, XLNet, CTRL, and many others.

Search engines have been indispensable for more than 20 years. Users search using keywords or phrases, and a list of related websites is displayed. The quality of the search experience depends on the accuracy of results, response time, and the presentation of the results page. However, advertisements and SEO tactics often boost websites to the top of the list, forcing users to sift through multiple sites to find answers or products.

LLMs are trained on vast amounts of public and non-public data, enabling them to provide direct answers rather than just website references. This is akin to asking a scholar a question rather than a librarian; the scholar attempts to answer your question directly, while the librarian directs you to a shelf of books.

LLMs are not limited to answering questions. They can translate languages, perform mathematical calculations, make comparisons, understand code, infer meaning from vague or misspelled queries, and create new works of art, music, written text, code, and even video.

What is Microsoft Copilot?

Copilot is Microsoft’s term for an AI-driven chatbot or assistant. While many Copilots are powered by OpenAI models, they are not limited to any specific AI provider or Microsoft partner. Since 2023, Microsoft has been systematically integrating Copilots across its services. Today, you can find Copilot in Bing, the Edge browser, Microsoft 365 apps such as Word, PowerPoint, and Teams, GitHub, Visual Studio, and even Microsoft Paint for AI image creation. One of the most profound improvements has been the summarization of Teams meeting transcripts, allowing faster review and recall of recorded meetings.

Advantages:

- Consistent Experience: Copilots provide uniform experiences across the Microsoft ecosystem.

- Conversational Abilities: Capable of answering questions and creating content through conversational chatbots.

- Ease of Use: No need for AI model training, infrastructure, or complex configurations.

- Multifunctional: Supports language translation, grammar and spell check, and image generation.

- Reduced Reliance on Stock Images: Lowers the risk of copyright infringement.

- Improved Productivity: Saves time on searching and writing tasks.

- Security and Privacy: Backed by Microsoft’s robust security and privacy measures.

- Grounded on User Data: Can access all the data visible to the user, creating a powerful personal assistant service.

What is an Agent?

If 2023 and 2024 were the years of generative AI, the coming years will be defined by AI agents. While tools like Microsoft Copilot, ChatGPT, and Gemini have been valuable assistants by answering questions, proofreading, generating images and videos, and producing original written content, agents take AI a step further by acting autonomously on behalf of users.

Agents are AI systems capable of performing tasks in both digital and real-world environments. Unlike generative AI, which primarily assists with information processing and content creation, AI agents can execute actions based on user intent or contextual understanding. For example, while generative AI can help plan your next vacation by suggesting destinations and itineraries, an AI agent could go further by booking flights, reserving hotels, and adjusting plans dynamically based on real-time data, such as flight delays or weather changes.

Agents will drive the next wave of automation by handling complex, multi-step tasks such as responding to emails, completing workflows, managing schedules, ordering supplies, initiating preemptive maintenance, and troubleshooting IT issues. As these systems evolve, they will integrate more deeply with enterprise software, personal devices, and IoT ecosystems, making them indispensable tools for productivity and decision-making.
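To make the distinction between answering and acting concrete, the sketch below shows the basic loop most agent designs share: plan a step, call a tool, observe the result, and repeat until the goal is met. It is a minimal illustration under stated assumptions: the `check_flight` and `rebook_flight` tools are hypothetical stubs, and a simple rule-based `plan` method stands in for the LLM that would normally choose the next action.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional, Tuple


# Hypothetical tools. In a real agent these would call airline or booking APIs.
def check_flight(flight: str) -> str:
    # Stubbed data: pretend flight XY123 is delayed and all others are on time.
    return "delayed" if flight == "XY123" else "on_time"


def rebook_flight(flight: str) -> str:
    return f"rebooked {flight} on next departure"


TOOLS: dict[str, Callable[[str], str]] = {
    "check_flight": check_flight,
    "rebook_flight": rebook_flight,
}


@dataclass
class TravelAgent:
    flight: str
    log: list[str] = field(default_factory=list)

    def plan(self, observation: Optional[str]) -> Optional[Tuple[str, str]]:
        """Stand-in for an LLM planner: pick the next tool call, or None to stop."""
        if observation is None:
            return ("check_flight", self.flight)
        if observation == "delayed":
            return ("rebook_flight", self.flight)
        return None  # goal satisfied, nothing left to do

    def run(self, max_steps: int = 5) -> list[str]:
        observation: Optional[str] = None
        for _ in range(max_steps):  # hard cap so the loop always terminates
            step = self.plan(observation)
            if step is None:
                break
            tool_name, argument = step
            observation = TOOLS[tool_name](argument)  # act, then observe the result
            self.log.append(f"{tool_name}({argument}) -> {observation}")
        return self.log
```

Capping the loop with `max_steps` is a deliberate design choice: an autonomous agent should always have a hard limit so a confused planner cannot keep acting indefinitely.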

What are the Risks?

There are four primary risks or concerns that are cited when discussing an LLM solution. The following section will discuss privacy, hallucinations, bias, and copyright.

Privacy

Privacy is a significant concern when prompts and documents shared with a Large Language Model (LLM) could contain sensitive company or customer information. These can range from simple queries (prompts) to entire documents or databases uploaded for translation, evaluation, or grounding. There are two main risks: first, potentially confidential data is being shared with a third party; second, this data may be exposed in subsequent prompts if used to train the AI. In theory, any data shared with an LLM provider could be stored, mined, sold, leaked, or used to further train the AI.

This situation is comparable to hiring a consultant under a Non-Disclosure Agreement (NDA). The consultant learns confidential information about your organization and is legally bound to protect that data. However, there is always a risk that trusting the wrong consultant could lead to a data leak. The consultant could inadvertently disclose information in unrelated conversations, store information in an unsecured location, or use that knowledge elsewhere.

Privacy issues with LLMs can be addressed through various measures:

- Legislation: Enacting laws to protect data privacy.

- Agreements with Providers: Establishing clear terms and conditions with the LLM provider.

- Provider Promises: Relying on provider assurances to uphold privacy standards.

- Monitoring and Pre-filtering: Implementing systems to monitor and filter LLM prompts.

- Employee Training: Educating employees about the risks and best practices for using LLMs.

- Custom LLM Solutions: Developing bespoke LLM solutions tailored to specific privacy needs.

- Premium LLM Offerings: Opting for premium services that offer enhanced privacy controls.
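As an illustration of the monitoring and pre-filtering measure, the following minimal sketch redacts a few kinds of sensitive values from a prompt before it is sent to an external LLM and reports which categories it found, so the event can be logged. The regular expressions and the `corp.example.com` internal domain are hypothetical placeholders; a production filter would be driven by your own data classification policy and would likely rely on a dedicated data loss prevention (DLP) service.

```python
from __future__ import annotations

import re

# Hypothetical patterns: tune these to your own data classification policy.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "HOST": re.compile(r"\b[a-z0-9-]+\.corp\.example\.com\b", re.IGNORECASE),
}


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive values with placeholders; return the text and matched categories."""
    findings: list[str] = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(prompt):
            findings.append(label)  # record the category for monitoring/audit logs
            prompt = pattern.sub(f"[{label}]", prompt)
    return prompt, findings
```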

Hallucinations

Accuracy of responses is another common concern with Large Language Models (LLMs). The term “hallucination” refers to instances where LLMs generate incorrect answers. These models are limited by the data they were trained on, which may exclude certain sources, topics, and historical knowledge. When allowed to respond creatively, LLMs may fill in gaps, leading to inaccuracies that can be critical in contexts such as medical or legal advice. Also, at times LLMs produce obviously incorrect responses with no explanation.

All responses from an LLM are best guesses based on probability. As humans, we know how challenging it can be to define the truth of any answer. LLM services often incorporate systems for scoring the potential accuracy of multiple candidate responses. Users are presented with the highest-rated response, while responses scored too low are not shown. For example, a model may only display answers that meet a required confidence level. However, some inaccuracies cannot be explained by the input data at all.

This situation is comparable to a teacher who hesitates to admit when they do not know an answer. Instead of admitting their lack of knowledge, they might provide an answer based on logical reasoning and experience. While these made-up answers can sometimes be close to the truth, there is a risk of disseminating inaccurate information.
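The response-scoring idea described earlier can be sketched as a simple selection rule: rank candidate answers by score and decline to answer when even the best candidate falls below a confidence threshold, which is exactly what the hesitant teacher should do. This is an illustration only; the candidate scores, the 0.7 threshold, and the fallback wording are hypothetical, and real services use their own, generally undisclosed, scoring methods.

```python
from __future__ import annotations

from typing import Optional

FALLBACK = "I'm not confident enough to answer that. Please consult a verified source."


def pick_response(candidates: list[tuple[str, float]], min_score: float = 0.7) -> Optional[str]:
    """Return the highest-scoring candidate, or None if none meets the threshold."""
    if not candidates:
        return None
    text, score = max(candidates, key=lambda c: c[1])
    return text if score >= min_score else None


def respond(candidates: list[tuple[str, float]], min_score: float = 0.7) -> str:
    # Decline honestly instead of presenting a low-confidence guess.
    best = pick_response(candidates, min_score)
    return best if best is not None else FALLBACK
```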

Hallucinations are also key to LLM creativity. We want LLMs to be creative, to generate novel content, and to describe common things in new ways. The truth becomes a balance between creativity, human interpretation, and factual accuracy. The trick lies in adjusting creativity levels to the context, through both training and generation settings such as sampling temperature. We want creativity when telling a story or creating an image but less creativity when asking for information on medical treatments or dosages. LLMs designed for factual responses can allow far less creativity.
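One widely used creativity control is sampling temperature, a parameter exposed by APIs such as OpenAI and Azure OpenAI. The sketch below shows the underlying math under simplifying assumptions: the model's raw scores (logits) for candidate next words are divided by the temperature before a softmax converts them to probabilities. Low temperatures concentrate probability on the top choice (more predictable output), while high temperatures flatten the distribution (more varied, creative output). The logit values are made up for illustration.

```python
import math


def softmax_with_temperature(logits: list, temperature: float) -> list:
    """Convert raw scores to probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


example_logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate words
focused = softmax_with_temperature(example_logits, 0.2)
creative = softmax_with_temperature(example_logits, 2.0)
```

With these example logits, a temperature of 0.2 puts more than 99% of the probability on the first word, while a temperature of 2.0 leaves it under 50% and spreads the rest across the alternatives.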

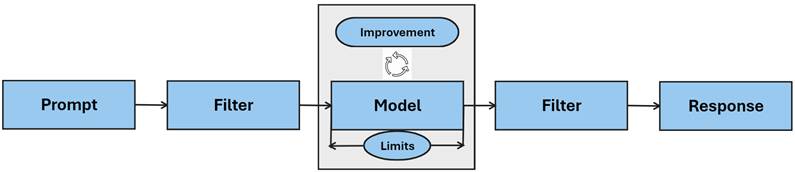

LLMs improve over time, increasing their accuracy. This improvement is driven by user feedback and advancements in model design. Designers are enhancing guardrails to prevent inaccurate responses. User prompts can be filtered and refined before reaching the LLM, the LLM itself can be improved to generate better responses, and the output can be reviewed for problematic responses before being shown to the user. Scoring, ranking, and limits on accuracy and creativity can be adjusted. Additionally, it is becoming more common for LLMs to warn and educate users about the potential inaccuracies of their responses and, in some cases, to provide references for factual verification.
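The layered approach described above (filter the prompt, generate a response, then review the output) can be sketched as a small pipeline. This is a minimal illustration, not how any particular provider implements guardrails: the blocked terms are hypothetical, and `model` is any function that turns a prompt into text, so a real LLM client (or an additional LLM acting as a classifier) could be substituted.

```python
from typing import Callable

BLOCKED_INPUT_TERMS = {"ignore previous instructions"}  # hypothetical prompt-injection marker
BLOCKED_OUTPUT_TERMS = {"password"}  # hypothetical output policy
DISCLAIMER = "Note: AI-generated content may be inaccurate; verify before acting."


def guarded_answer(prompt: str, model: Callable[[str], str]) -> str:
    # Layer 1: screen the prompt before it reaches the model.
    if any(term in prompt.lower() for term in BLOCKED_INPUT_TERMS):
        return "Request blocked by input filter."
    # Layer 2: the model itself (any callable mapping prompt -> text).
    answer = model(prompt)
    # Layer 3: review the output before showing it to the user.
    if any(term in answer.lower() for term in BLOCKED_OUTPUT_TERMS):
        return "Response withheld by output filter."
    # Layer 4: remind the user that responses may be inaccurate.
    return f"{answer}\n\n{DISCLAIMER}"
```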

LLM Bias

LLMs reflect the data used to train them. If this training data contains bias or offensive material, the model’s responses can reflect these undesirable elements. What is considered offensive is complex and subjective, varying across individuals and cultures. Determining what is acceptable is further complicated on a global scale, where culture, politics, law, religion, ethics, history, age, ethnicity, gender, and more come into play. Additionally, the value of free speech adds another layer of complexity.

This situation can be compared to a magic mirror that reflects all our best and worst characteristics, limited only by the quality of the glass. Every desirable and undesirable trait is on full display, and this harsh depiction can be difficult for many to bear. For the sake of our pride and self-image, we need a way to soften the edges of our less desirable traits. These corrections must be balanced to respect all people. A flaw in the mirror could make everyone taller, thinner, or reduce the variation of skin tones. Removing bias requires a careful balancing act of truth while compensating for imperfections in both the mirror and our own desires.

The solution to bias in LLMs partially lies in the data input. Biased data leads to biased results; garbage in, garbage out. The broader the data, incorporating multiple points of view, the more balanced the responses. However, we will see some divergence, much like news networks that cater to different perspectives, with users choosing an LLM aligned with their worldview. Addressing bias also involves human intervention: using human judgment, user feedback, and research to filter out inputs that tend to produce undesirable responses, and applying content filters (guardrails) to the output. Both input and output filters could involve additional LLMs, relying on layers of AI to mitigate bias.

Copyright

The last potential risk that we will discuss involves copyright. The creative capabilities of LLMs enable users to generate words and images that are effectively the property of the user or the provider. One advantage of image creation LLMs, such as OpenAI’s DALL-E, is that the generated images are presumed to be free from copyright. However, the LLM derives its knowledge from existing sources. Among the millions of prompts and responses, there will inevitably be phrases, images, music, and videos that may inadvertently plagiarize existing works.

This can be compared to any human who has read hundreds of books, listened to thousands of songs, watched countless videos, and observed many works of art. All human creations are influenced by other works, blurring the line between creativity and imitation. The risk with LLMs arises when their output directly threatens the livelihood of individuals like artists, musicians, actors, and writers. The debate and litigation on this topic may never end.

The solution, if there is one, will come from legislation and precedents set by ongoing litigation. Like the issues described above, the solution will rely on verifiable data sources combined with model tuning, input controls, output controls, and user education. Providers will be mandated by law or societal pressure to disclose and compensate identified content sources where possible. Although the risk of copyright infringement using an LLM is less than using the Internet to acquire media for marketing and presentation, it remains a significant concern.

In practical terms, relying on a well-known LLM source for commercial use is safer than creating your own LLM model. The legal risk appears to fall more squarely on the provider or trainer (though I am no lawyer). Ensuring that any data you use for grounding is legally yours and training employees to avoid using obviously derivative images or media is crucial. Despite these controls, users often attempt to trick these LLMs into creating derivative content. Microsoft Copilot, ChatGPT, and other LLMs have mature controls to help prevent the use or creation of copyrighted materials, but they can still be tricked.

What is Grounding?

LLMs are trained through mathematical probability and word associations. Data is fed into the model using text or other media like images, associations are calculated, and the results are evaluated for accuracy. A feedback loop helps improve the accuracy of these results, which depend on the provided data, evaluation methods, and settings that control allowed accuracy and creativity.
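The idea of learning word associations from data can be illustrated with a toy bigram model, which simply counts which word follows which and converts those counts into probabilities. Modern LLMs use transformer neural networks trained on vastly more data and context, so treat this strictly as an analogy for “probability and word associations,” not as how an LLM is built. The sample corpus is invented.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, dict[str, float]]:
    """Estimate P(next word | current word) from raw co-occurrence counts."""
    words = corpus.lower().split()
    counts: defaultdict[str, Counter[str]] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    model: dict[str, dict[str, float]] = {}
    for word, followers in counts.items():
        total = sum(followers.values())
        model[word] = {nxt: n / total for nxt, n in followers.items()}
    return model


def predict_next(model: dict[str, dict[str, float]], word: str) -> str | None:
    """Return the most probable next word, or None if the word was never followed."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return max(followers, key=followers.get)


model = train_bigrams("the alert fired the alert closed the analyst reviewed")
```

Here “the” is followed by “alert” twice and “analyst” once, so the model assigns P(alert | the) = 2/3 and predicts “alert” as the next word.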

An LLM is pre-trained on a broad dataset. Grounding occurs when you augment the LLM with an additional, specific, preferred dataset. The LLM can then be instructed to respond only using specific data or to prioritize responses based on your data before providing a general answer. Grounding can be presented as a file upload, website URL option, or sign-on to services like OneDrive. Grounding can be part of the prompt, for example stating, “limit responses to news articles from 2023.” Grounding can also involve backend calls to a media library, database, or other data source by an LLM-powered application.

You may encounter the term Retrieval Augmented Generation or RAG in association with grounding.
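A minimal sketch of retrieval-augmented grounding follows: find the documents most relevant to a question, then place them in the prompt with an instruction to answer only from them or, optionally, to fall back to a general answer. The keyword-overlap retrieval, the stopword list, the document names, and the prompt wording are all simplifying assumptions; production RAG systems typically use vector embeddings and a search index such as Azure AI Search.

```python
from __future__ import annotations

import re

STOPWORDS = {"a", "an", "the", "how", "do", "i", "to", "and", "of", "is", "my"}


def tokenize(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Rank documents by keyword overlap with the question; drop non-matches."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda name: len(q & tokenize(docs[name])), reverse=True)
    return [name for name in ranked[:k] if q & tokenize(docs[name])]


def build_grounded_prompt(question: str, docs: dict[str, str], allow_general: bool = False) -> str:
    sources = retrieve(question, docs)
    context = "\n".join(f"[{name}] {docs[name]}" for name in sources) or "(no matching sources)"
    if allow_general:
        rule = "Prefer the sources below. If they are insufficient, give a general answer and say so."
    else:
        rule = "Answer only from the sources below. If they do not contain the answer, say you don't know."
    # The model is unchanged; only the context supplied in the prompt differs.
    return f"{rule}\n\nSources:\n{context}\n\nQuestion: {question}"
```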

Grounding can be compared to hiring an expert chef who has worked in restaurants worldwide. You hire this pre-trained chef to work in your new Italian restaurant, instructing them to cook only from the menu you provide. You are confident that the chef can make any dish on the menu without additional guidance. Given the chef’s vast experience, you also have the option to allow a certain level of creativity with the menu or to accommodate custom requests.


For example, you upload your proprietary knowledge base and technical resolution documentation to ground an LLM to assist your computer helpdesk team in answering support calls. The LLM will provide the helpdesk employees with answers grounded in your own instructions and, if allowed, can also provide general responses when the grounded data is insufficient.

To be clear, grounding does not re-train the model. Grounding provides context. Back to the chef example, limiting the chef to an Italian menu does not change the chef’s skills. Those skills are ingrained. Rather, grounding tells our chef to use their skills to make only Italian meals from our menu. If our menu has meals that require unique skills, the chef cannot learn new skills by simply reading our menu.

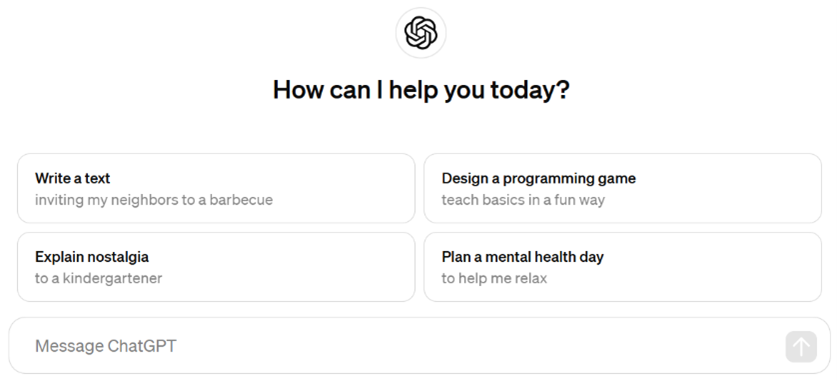

Introduction to ChatGPT from OpenAI

ChatGPT from OpenAI was released as a demo on November 30, 2022. OpenAI had previously made the Generative Pretrained Transformer (GPT) model available only as an API for developers. Microsoft has been a major investor in OpenAI since 2019.

ChatGPT brought the power of LLMs to the masses with a free web service, alongside the ongoing API options. The website https://chatgpt.com grew rapidly in popularity and launched a premium service, ChatGPT Plus, in February 2023. Microsoft’s Bing Chat, built on OpenAI’s GPT-4 model, was also released in February 2023. It was rebranded as Copilot in November 2023, followed by a premium service called Copilot Pro in January 2024.

The premium versions of both services provide access to GPT-4 and the image creation service DALL-E. Anticipation is growing for GPT-5, expected sometime in 2025, with early announcements related to video generation, voice and video chat, and human avatar capabilities generating wide interest. Both services also offer various enterprise options.

In May 2024, OpenAI announced GPT-4o, a new model available to free ChatGPT users with usage limits and to premium users with higher limits. Premium users also gained the ability to connect ChatGPT to personal OneDrive and Google Drive accounts for a more personalized experience.

Premium = Privacy

The primary privacy distinction lies in paid business licensing. ChatGPT Team and Enterprise, like Microsoft’s enterprise Copilot offerings, exclude customer data from model training by default, while consumer ChatGPT users, including Plus subscribers, must opt out in their settings. ChatGPT Enterprise pricing details are not publicly available. Microsoft offers Copilot for Microsoft 365 as a separately licensed add-on, but more importantly, Microsoft Copilot with commercial data protection (formerly Bing Chat Enterprise) is included with most enterprise user licenses (E3-E5, A3-A5, etc.). This means most enterprise M365 users already have access to a privacy-protected enterprise Copilot today, not including other services like Azure OpenAI and Security Copilot.

What is a GPT?

One unique option for ChatGPT users, starting with the ChatGPT Plus plan, is access to a service referred to as “GPTs.” Premium users can create and share custom versions of ChatGPT. These custom versions have a unique name, logo, description, URL, and can be grounded using public websites and uploaded documents. Users also have some limited control over the personality of these GPTs. There is a marketplace with a rating system and usage statistics, with an unfulfilled promise of a gig economy for monetizing GPTs.

It does not appear that these custom GPTs are available as an API endpoint today, only as a website. Likely, there will be an API or embedding option for developers in the future. These GPTs are incredibly easy to create; the entire process is a prompted conversation with no coding required. Even a young child could create a GPT in minutes. GPT controls and grounding options are limited, and only premium users can create GPTs. The potential for these new LLM bots seems limitless once they open the floodgates, starting with a recent announcement that GPTs can now be shared with free users. Microsoft does have a comparable solution called Azure OpenAI, which we will cover in an upcoming section.

Side Note: It is a common misconception that prompts are used directly to train the LLM. These models are not actively learning from your prompts or shared documents in near-real-time; at least not yet. It takes considerable time and effort to retrain a model. Though this data could be used for auditing, research, or stored for use in a future training round if allowed, the process is far less immediate than you may assume today.

Microsoft Copilot

The growing number of Copilot names related to Microsoft AI can be confusing. It is important to understand the distinct differences as you explore these technologies. OpenAI traditionally provides ungrounded, general AI services, though some grounding options are available.

OpenAI Services:

- ChatGPT: Available with various models (GPT-3.5, GPT-4, GPT-4o), plus prior versions and APIs.

- Custom GPTs: Allow grounding on public websites and a limited set of uploaded documents.

- DALL-E: Image creation.

- Codex: The model that originally powered GitHub Copilot.

- Whisper: Speech-to-text (text-to-speech is provided by separate TTS models).

- Sora: Text-to-video.

- Jukebox: Music generation.

- SearchGPT: A new prototype for AI-enhanced web search.

Microsoft Copilot:

Free Version: Embedded in Bing and the Edge browser, offering an ungrounded, general LLM service like free ChatGPT.

Copilot Pro: Premium service available to home users for a monthly fee; the enterprise counterpart for Microsoft 365 users is Copilot for Microsoft 365. These premium Copilots are grounded on personal and company data accessible to the user (on behalf of the user), enhancing personal assistant capabilities. This includes DALL-E image creation inside Bing, with a unique ability to upload sample images.

Copilot cannot access data that the user cannot access or that is expressly blocked (called “on behalf of” access). This reinforces the importance of the principle of least privilege and helps users better manage their data.
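The “on behalf of” principle can be illustrated with security trimming: before any document is used for grounding, it is filtered against the groups the requesting user belongs to. This sketch is a simplified assumption about the concept, not Microsoft’s implementation; the `Document` type, file names, and group names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    name: str
    allowed_groups: frozenset[str]  # empty set means nobody may use it (expressly blocked)


def visible_documents(user_groups: set[str], docs: list[Document]) -> list[str]:
    """Only documents the user could already open are eligible for grounding."""
    return [d.name for d in docs if d.allowed_groups & user_groups]
```

Because the assistant inherits the user’s permissions, over-shared files become visible to it as well, which is why least privilege matters more once an AI assistant is searching on the user’s behalf.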

Microsoft Copilot Studio is a new service that allows administrators to create custom Copilots, much like custom GPTs from OpenAI or Azure OpenAI. These custom Copilots can be hosted in a stand-alone web app or in Microsoft Teams (for internal and external users). Created with a simple, prompt-based interface, these chatbots are an extension of Copilot, grounded on specific data, customized to your liking, and able to operate as a service principal. Unlike Azure OpenAI, which is hosted in Azure, these Copilots are an extension of Power Platform (a service for designing custom apps). Copilot Studio can be an add-on to Power Platform licensing or a stand-alone plan based on message capacity.

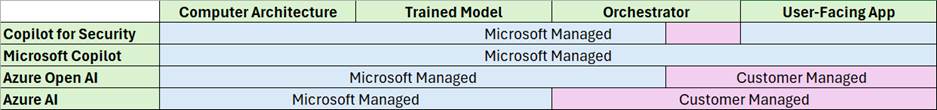

Azure AI and Azure OpenAI:

Azure AI: Allows developers to create AI solutions using Microsoft Azure cloud services, based on proprietary AI models and partnerships.

Azure OpenAI: A subset of Azure AI services based on a partnership with OpenAI, making it easier for developers and enterprise customers to host OpenAI GPT-style services on Microsoft Azure.

Both Azure AI and Azure OpenAI use pre-trained AI or LLM models, which can be grounded on various data sources like databases, image libraries, and proprietary documents.

Security Copilot: Specialized AI or LLMs trained specifically for security investigations and research. It is grounded on an organization’s private security data and embedded in various Microsoft security web portals.

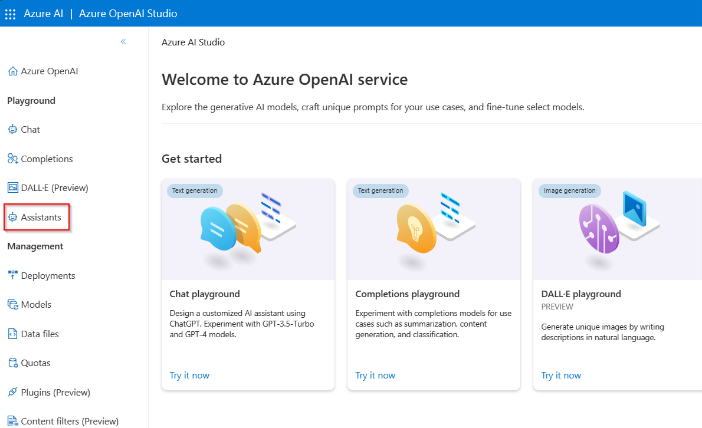

Azure AI and Azure OpenAI

Microsoft has been providing AI services in Azure since 2016, starting with Cognitive Services, which included advanced search capabilities. Azure offers a wide variety of AI services, including machine learning, vision (image), face recognition, OCR (image-to-text), translation, video indexing, bot services, sentiment analysis, content moderation, text-to-voice, and text-to-avatar.

Like the early days of OpenAI, these services operate as APIs, enabling developers to add AI capabilities to applications. However, this approach has mostly been out of reach for the average user.

Azure OpenAI (AOAI):

Azure OpenAI provides an enterprise equivalent to the OpenAI custom GPT service. Although this service remains out of reach for the average Microsoft 365 user, Azure administrators now have access to an increasing number of low-code/no-code options for creating LLM services hosted in Azure. The Azure OpenAI service provides a more advanced option for creating and hosting custom LLMs.

Azure OpenAI offers more control than OpenAI GPTs, including detailed system prompts (the operating guidelines of the LLM) and settings to manage memory, creativity, accuracy, and more. Designers can provide more varied grounding options and have greater overall control. The published app is available in a playground and testing environment for evaluation, in addition to being accessible as an API. Azure OpenAI also integrates with many of the other Azure AI services mentioned above.
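The following sketch shows how a system prompt, a bounded conversation history (a simple form of memory), and creativity settings fit together in a chat request to Azure OpenAI. It assumes the `openai` Python package (version 1.x), which provides an `AzureOpenAI` client; the deployment name, API version, system prompt, and environment variable names are placeholders to replace with your own. The request is built by a separate function so it can be inspected without calling the service.

```python
from __future__ import annotations

import os

SYSTEM_PROMPT = (
    "You are a SOC assistant. Answer only questions about security incidents. "
    "If you are unsure, say so."
)


def build_request(question: str, history: list[dict] | None = None, temperature: float = 0.2,
                  max_tokens: int = 500, max_history: int = 6) -> dict:
    """Assemble chat messages and settings without contacting the service."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    # "Memory": keep only the most recent turns to bound token usage.
    recent = history[-max_history:] if history and max_history > 0 else []
    messages.extend(recent)
    messages.append({"role": "user", "content": question})
    return {"messages": messages, "temperature": temperature, "max_tokens": max_tokens}


def ask(question: str, deployment: str = "my-gpt-4o-deployment") -> str:
    from openai import AzureOpenAI  # requires: pip install "openai>=1.0"

    client = AzureOpenAI(
        azure_endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],
        api_key=os.environ["AZURE_OPENAI_API_KEY"],
        api_version="2024-02-01",
    )
    # In Azure OpenAI, "model" is the name of your deployment, not the base model.
    response = client.chat.completions.create(model=deployment, **build_request(question))
    return response.choices[0].message.content
```

A low temperature suits factual SOC work; raising it would make answers more varied at the cost of consistency.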

Azure AI and Azure OpenAI are priced based on token usage. For English text, a token is roughly four characters, or about three-quarters of a word, so you can estimate the number of tokens in a phrase by dividing the number of words by 0.75 (e.g., 100 words is approximately 133 tokens, and 75 words is approximately 100 tokens). One distinction among LLM models is the maximum number of tokens allowed in a single request (the context window). Customers pay based on token volume rather than per user.
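These rules of thumb translate into a quick estimator. This is a rough sketch for English text only; actual counts depend on the model’s tokenizer (OpenAI publishes the `tiktoken` library for exact counts), and the per-1,000-token prices in the example are hypothetical placeholders, not current Azure pricing.

```python
def tokens_from_words(word_count: int) -> int:
    # English rule of thumb: 1 token is about 0.75 words, so tokens = words / 0.75.
    return round(word_count / 0.75)


def tokens_from_chars(char_count: int) -> int:
    # English rule of thumb: 1 token is about 4 characters.
    return round(char_count / 4)


def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Token-based billing: input and output tokens are typically priced separately."""
    return (prompt_tokens / 1000) * input_price_per_1k + (completion_tokens / 1000) * output_price_per_1k
```

For example, a 100-word prompt estimates to about 133 tokens, and 1,000 input tokens plus 500 output tokens at hypothetical rates of $0.005 and $0.015 per 1,000 tokens would cost about $0.0125.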

What is Security Copilot?

Security Copilot is a grounded enterprise LLM designed to assist Security Operations Center (SOC) employees. Security Copilot is tailored to enhance cybersecurity operations and is available in various Microsoft security portals, including the Microsoft Defender (XDR) portal (www.security.microsoft.com), the Azure portal, Intune, and a stand-alone experience at https://securitycopilot.microsoft.com.

Key Features:

Pre-trained Expertise: Security Copilot is pre-trained for security-related prompts. It can produce KQL queries, decode scripts, explain code, translate 25 languages, and provide guided responses, impact analysis, and incident summarization.

Generative AI Embedding: Security Copilot is embedded in the various Microsoft security web portals used by SOC teams including the Defender XDR portal, Azure portal, Intune and more.

Secure Information Handling: Unlike public LLMs, Security Copilot is designed to safeguard data related to security incidents. By default, it does not share data with Microsoft for training, reducing the risk of exposing confidential information.

Integration with Security Data Sources: Security Copilot uses plugins to connect to various security data sources (both Microsoft and over twenty third-party solutions like VirusTotal), grounding itself on your data. This allows your SOC team to ask natural language questions, decode and translate scripts, get tailored guidance, and privately summarize and share information internally. This includes a unique plugin for KQL datastores.

You may hear the term Skills used interchangeably with Plugins, though strictly speaking, a skill is a capability provided by a plugin.

Azure Resource: Security Copilot is deployed as an Azure resource, supported in all major geographies across North and South America, Europe, and Asia. It resides in a resource group within one of your Azure subscriptions, allowing you to control access using Azure role-based access control and defining the processing location for your data. It is simple to set up and manage.

Security Copilot API for Automation: The Security Copilot API and Azure Logic App Connector make it easy to call Security Copilot services from a Microsoft Sentinel playbook or other automation services.

MDTI Included: Security Copilot includes Microsoft Defender Threat Intelligence (MDTI), built on Microsoft’s 2021 acquisition of RiskIQ, which provides Indicator of Compromise (IOC) discovery and threat actor reports called intel profiles.

User Interaction:

Promptbooks and Freeform Conversations: Security Copilot users receive suggested prompts and curated sequences of prompts called promptbooks. They can also hold freeform conversations in the standalone portal.

Sessions: Conversations with Security Copilot, called sessions, are supported in 25 languages. These sessions can be saved, resumed, and shared, and can be used to create custom promptbooks for future use.
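Conceptually, a promptbook is an ordered series of prompts that share inputs, such as an incident ID. The sketch below models that idea with Python’s `string.Template`; the prompt wording and the `incident_id` parameter are hypothetical, and it does not reflect the actual promptbook format Security Copilot uses.

```python
from __future__ import annotations

from string import Template

# Hypothetical promptbook: an ordered list of prompts sharing one input.
INCIDENT_PROMPTBOOK = [
    Template("Summarize incident $incident_id."),
    Template("List the users and devices involved in incident $incident_id."),
    Template("Recommend remediation steps for incident $incident_id."),
]


def expand_promptbook(book: list[Template], **inputs: str) -> list[str]:
    """Fill every step with the same inputs; substitute() raises KeyError if one is missing."""
    return [step.substitute(**inputs) for step in book]
```

Running the steps in order, each in the same session, lets later prompts build on the context established by earlier answers.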

Advanced Automation: Security Copilot can use automated response tools native to cloud security solutions like Defender for Endpoint. It allows for automated responses using Azure Logic Apps, such as running an antivirus scan, isolating a device, locking a user account, and more.

Billing: Security Copilot billing is based on Security Compute Units (SCUs). Capacity reservations can be purchased in units of 1-100 SCUs, with a minimum of three SCUs recommended. Users cannot exceed their allotted hourly SCUs, ensuring predictable pricing. Security Copilot includes a usage dashboard, allowing admins to adjust SCUs as needed. The cost of Security Copilot can range from over $100k annually to potentially millions, depending on usage and scale.
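Because SCUs are provisioned by the hour, annual cost is straightforward arithmetic. This sketch assumes the launch list price of $4 per SCU per hour and round-the-clock provisioning; verify current pricing for your region before budgeting.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_scu_cost(provisioned_scus: int, price_per_scu_hour: float = 4.0) -> float:
    """Estimate yearly cost for SCUs provisioned around the clock."""
    if not 1 <= provisioned_scus <= 100:
        raise ValueError("provisioned SCUs must be between 1 and 100")
    return provisioned_scus * price_per_scu_hour * HOURS_PER_YEAR


for scus in (1, 3, 10):
    print(f"{scus:>3} SCUs: ${annual_scu_cost(scus):,.0f} per year")
```

At that assumed rate, the recommended minimum of three SCUs comes to 3 × $4 × 8,760 hours = $105,120 per year, consistent with the “over $100k” estimate.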

Summary:

In summary, Security Copilot provides a robust, private, secure, and integrated solution for SOC teams, enhancing their ability to manage and respond to cybersecurity threats effectively. The key advantages of Security Copilot are its status as a private LLM service, embedded within your Microsoft security portals, grounded on your data, with easy-to-use controls to manage access and cost, supporting integration with a wide range of Microsoft and third-party security services.

Why might Microsoft customers consider an alternative solution?

Price is one of the main reasons customers might seek alternative solutions. For many, Security Copilot will be too expensive under the current pricing.

Other Considerations

- Limited Adoption of Microsoft Security Services: Some organizations may not be heavily invested in Defender XDR or may have limited adoption of Microsoft security services.

- Lack of Support for Certain Environments: Azure Government and other specific enclaves are not yet supported.

- Preference for Custom Solutions: Some organizations may prefer or have a higher degree of trust in a custom solution tailored to their specific needs.

- Subsidiary Considerations: If you are a subsidiary of a larger organization that owns the Defender XDR portal for your organization, you might face integration and access challenges.

- Augmenting Security Copilot: Customers may use some of the solutions described below to augment Security Copilot.

- Stepping Stone or Temporary Fix: You might also consider these solutions as a temporary alternative to Security Copilot while waiting on funding, availability, or other blockers.

Alternative Solutions:

For those who require a temporary or permanent alternative to Security Copilot due to cost, service availability, preference, or other factors, there are a few Microsoft-provided options. Although none of these alternatives can fully replace the robust capabilities of Security Copilot, they can deliver a degree of generative AI capability.

These available options provide a wide range of complexity, privacy, and control:

- Public LLM solutions with limited privacy protection.

- Premium LLM solutions with some degree of privacy protection.

- Custom solutions built on pre-trained LLMs that allow greater control of privacy, grounding, and other settings.

- Professionally developed solutions with a more complex design that may involve training your own AI models.

Where does Security Copilot fall on this spectrum? Somewhere between a premium LLM and a custom LLM solution. You might think of Security Copilot as a software as a service (SaaS) version of a security LLM: the service is private and grounded in your data, and it can be further customized with additional data using plugins and custom promptbooks.

Desired Outcomes

Using Public LLMs

Publicly available LLMs like ChatGPT and Microsoft Copilot can perform many of the same tasks as Security Copilot, such as language translation, document summarization, code explanation, query creation, and providing common recovery steps. With additional tools and websites, you can decode scripts and look up indicators of compromise. Employee expertise can fill many of the remaining gaps.

SOC teams have historically operated using internal findings combined with external websites, services, scripts, and employee expertise to resolve security incidents.
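Some of these tasks never need to leave your network. Decoding an obfuscated PowerShell command is a common example: PowerShell's `-EncodedCommand` parameter takes Base64-encoded UTF-16LE text, so an analyst can decode it locally instead of pasting a possibly sensitive payload into an external website. The function name below is illustrative, and this is a minimal sketch that handles only that one encoding.

```python
import base64


def decode_powershell_encoded_command(encoded: str) -> str:
    """Decode a PowerShell -EncodedCommand payload (Base64 over UTF-16LE text).

    Raises binascii.Error if the input is not valid Base64.
    """
    raw = base64.b64decode(encoded, validate=True)
    return raw.decode("utf-16-le")


if __name__ == "__main__":
    # Build a harmless sample locally rather than handling a real payload.
    sample = base64.b64encode("Write-Output 'hello'".encode("utf-16-le")).decode("ascii")
    print(sample)
    print(decode_powershell_encoded_command(sample))
```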

However, free or low-cost LLM services often impose limits, such as the number of API calls per hour or the number of tokens per prompt. These limits are usually ample for individual users but are quickly reached under frequent use. In some cases, additional capacity can be purchased.
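If you automate prompts against a rate-limited service, a small client-side guard keeps your workflows from exhausting the quota and getting throttled. The sketch below is a generic sliding-window limiter. The limit of 100 calls per hour is a hypothetical quota, not any provider's published figure, and the `clock` parameter exists only so the logic can be tested without waiting.

```python
import time
from collections import deque
from typing import Callable, Deque


class SlidingWindowLimiter:
    """Client-side guard allowing at most `max_calls` requests per `window_seconds`."""

    def __init__(self, max_calls: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.clock = clock
        self._calls: Deque[float] = deque()  # timestamps of recent accepted calls

    def try_acquire(self) -> bool:
        """Record a call and return True if it fits in the window, else return False."""
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self._calls and now - self._calls[0] >= self.window_seconds:
            self._calls.popleft()
        if len(self._calls) < self.max_calls:
            self._calls.append(now)
            return True
        return False


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_calls=100, window_seconds=3600)  # hypothetical quota
    if limiter.try_acquire():
        print("OK to send the prompt")
    else:
        print("Hourly budget exhausted; queue the request instead")
```

A sliding window is used rather than a fixed hourly reset so that a burst at the end of one hour cannot be followed immediately by a second full burst.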

Public LLMs do not typically support extensive grounding, though prompt-based grounding is an option. The risks here are obvious: exposing internal information to external websites and services, relying on inconsistent resolution practices, and over-reliance on human expertise. This is comparable to relying on a free accounting Q&A forum to help you do your taxes; you might get good answers, but you need to limit what you share.

To mitigate the risks of using public LLMs, consider the following measures:

- Create internal guidance on the use of external services for incident investigations.

- Document approved investigation and recovery steps for common security incidents.

- Educate security employees on allowed and disallowed practices.

- Block access to unsanctioned external services that might be used during an investigation.

- Monitor and filter activity related to approved incident investigation sources.

- Automate requests to public LLMs for better control and monitoring of shared information.
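Blocking and monitoring are normally enforced by tools such as Microsoft Defender for Cloud Apps, but the same allowlist idea is useful inside your own automation, so that a script or playbook only calls approved sources. The sketch below checks a URL's host against a list of approved domains, including their subdomains. The domain list and function name are hypothetical placeholders for your organization's approved sources.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; replace with your organization's approved sources.
APPROVED_DOMAINS = frozenset({"virustotal.com", "learn.microsoft.com", "copilot.microsoft.com"})


def is_approved(url: str, approved: frozenset[str] = APPROVED_DOMAINS) -> bool:
    """Return True if the URL's host is an approved domain or a subdomain of one."""
    host = (urlsplit(url).hostname or "").rstrip(".")
    # Match the whole domain or a dot-separated subdomain, so that
    # "virustotal.com.evil.example" is not mistaken for "virustotal.com".
    return any(host == d or host.endswith("." + d) for d in approved)


if __name__ == "__main__":
    for candidate in ("https://www.virustotal.com/gui/home", "https://chat.example-llm.net/"):
        print(candidate, "->", "approved" if is_approved(candidate) else "blocked")
```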

Practical Examples:

- Document the common investigation and recovery steps for the top 5-10 security incidents.

- List all approved websites, forums, scripts, queries, and sources.

- Provide a mechanism to request approval of new security resources as they are discovered.

- Describe the types of information that can and cannot be shared in a query, prompt, or post.

- Block disallowed websites and tools, especially untrusted LLMs.

- Monitor activity to trusted LLMs and threat intelligence sources and forums.

- Use Microsoft Defender for Cloud Apps and Microsoft Purview to control public LLM usage.

- Use Microsoft Sentinel Playbooks (Azure Logic Apps) or Microsoft Power Automate to enrich incidents with sanitized requests to public LLMs.

For example, Microsoft Sentinel playbooks can automatically prompt a public LLM for additional information. The prompt can be sanitized to remove confidential information before it is sent, and the response can then be added to the incident as a comment or task. Similar practices are commonly used to enrich incidents with public resources like VirusTotal. However, responses in this case are limited to general information, such as common recovery steps.
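The sanitization step can be as simple as pattern-based redaction applied before the prompt leaves your environment. The sketch below replaces email addresses, IPv4 addresses, and internal host names with placeholders. The rules are assumptions: `contoso.local` stands in for your internal DNS suffix, and regex redaction is best-effort. It does not cover IPv6, user names, or free-text secrets, so it complements the guidance above rather than replacing it.

```python
import re

# Hypothetical redaction rules; "contoso.local" stands in for your internal DNS suffix.
# Order matters: emails are redacted before host names so their domains are not split.
REDACTIONS = [
    (re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"), "[EMAIL]"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "[IPV4]"),
    (re.compile(r"\b[A-Z0-9-]+\.contoso\.local\b", re.IGNORECASE), "[HOST]"),
]


def sanitize_prompt(text: str) -> str:
    """Replace sensitive tokens with placeholders before sending text to a public LLM."""
    for pattern, label in REDACTIONS:
        text = pattern.sub(label, text)
    return text


if __name__ == "__main__":
    raw = "User alice@contoso.com on ws-042.contoso.local connected to 10.1.2.3"
    print(sanitize_prompt(raw))
```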

Public LLMs and security resources can be an excellent source of information during security incident response, given reasonable moderation, approved sources, and limits on sharing sensitive information. Enterprise customers, however, are likely to want more control and privacy.

Using Premium LLMs

One key distinction of a premium LLM is some degree of privacy: it is often a publicly available LLM service with added commitments not to retain, resell, or use your data for training. Premium LLMs also offer more controls, monitoring, higher performance, and higher token limits. Examples of premium LLMs include ChatGPT Enterprise and Microsoft Copilot with commercial data protection.

Microsoft describes commercial data protection for eligible Copilot users as follows:

- Prompts and responses are not saved.

- Microsoft has no eyes-on access.

- Chat data is not used to train the underlying large language models.

Using premium (private) LLMs does not significantly change the guidance given for public LLMs:

- Use private (premium) LLMs: Whenever possible, use premium LLMs for routine questions during security incident research.

- Provide guidance and monitoring: Ensure that SOC teams have clear guidelines and monitoring to maintain compliance.

- Store organizational documentation: Keep security response guidance and procedures in a location accessible to all SOC employees (e.g., SharePoint or OneDrive) so that Copilot prompts can draw on them.

Private LLMs may also include options to be grounded using limited datasets. Microsoft’s Copilot Pro, for example, can access user data and operate as the user, summarizing emails, Teams meetings, and more. It can help draft communications, summarize related meetings, and assist with security incident research.

Sharing security response documentation in a central location like SharePoint can provide a useful grounding source for Copilot Pro. For instance, you could prompt Copilot Pro to summarize the approved malware recovery steps from your “Approved Security Response” documentation.

In this example, SOC teams can be directed to use private LLMs like Copilot Pro and ChatGPT Enterprise for manual and automated prompts. These tools run in a separate browser window or app and are not grounded in your own security data, though ungrounded and partially grounded responses can still provide significant security-related assistance.

Using Custom Grounded LLMs

Organizations can choose to use public or private generative AI services like ChatGPT or Microsoft Copilot to enhance security operations. However, these solutions may lack privacy, embedded experience, security-specific training, and security data grounding provided by Security Copilot.

Azure OpenAI is a subset of Azure AI services based on a partnership with OpenAI, making it easier for developers and enterprise customers to host OpenAI GPT services on the Microsoft Azure cloud platform.

Recall that OpenAI offers a GPT service where premium users can create custom, grounded GPTs. These can be shared with free and premium ChatGPT users as a URL or in the GPT marketplace. They have limited grounding capabilities and do not have an API endpoint.

Azure OpenAI takes the concept of custom GPTs to the next level, providing low-code tools for building generative AI solutions on OpenAI's GPT models through APIs. It offers a higher degree of privacy, increased limits, more controls, regional hosting, broader grounding options, and flow control. It can be hosted as a web application or API that you control. Given the available options, cost, and level of difficulty, Azure OpenAI is the most robust alternative to Security Copilot.

Update: Azure OpenAI now has an option in preview to create GPT bots in Microsoft Teams.

System Prompts:

LLMs often use a system prompt as a means of control. This is a hidden instruction included with every API request and at the start of each user session, for example, “You are an AI assistant that helps people find information.” The system prompt, along with any warning messages you choose to add to responses, counts against token usage and token limits. A good system prompt is therefore both detailed and concise.

You can even ask Copilot or ChatGPT to help you write a good system prompt and to estimate its token count. For example, the system prompt shown below, generated by ChatGPT, costs 86 tokens.
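In chat-style APIs, the system prompt is typically sent as the first entry of the message list on every request. That is why it counts against tokens each time. The sketch below uses the example system prompt from the steps that follow. It rebuilds the message list per request and gives a rough token estimate using the common rule of thumb of about four characters per English token. The function names are illustrative, and the estimate is only approximate; a real tokenizer such as OpenAI's `tiktoken` gives exact counts.

```python
import math
from typing import Dict, List, Optional

SYSTEM_PROMPT = (
    "You are a security operations assistant. You support security operation analysts "
    "during incident responses by providing instructions, answering queries, and looking "
    "up IOCs, CVEs, and other related information. You respond in a friendly yet "
    "professional tone, tailored to the needs of security analysts. You do not provide "
    "answers to unrelated requests. If you do not know the answer to a question, respond "
    'by saying, "I do not know the answer to your question."'
)


def build_messages(user_prompt: str,
                   history: Optional[List[Dict[str, str]]] = None) -> List[Dict[str, str]]:
    """Prepend the system prompt, then prior turns, then the new user prompt."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_prompt})
    return messages


def rough_token_estimate(text: str) -> int:
    """Approximate tokens as ~4 characters each; use a real tokenizer for exact counts."""
    return math.ceil(len(text) / 4)


if __name__ == "__main__":
    msgs = build_messages("How do I isolate an infected machine?")
    print(sum(rough_token_estimate(m["content"]) for m in msgs), "tokens (rough estimate)")
```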

How to use Azure OpenAI to create an enterprise security GPT:

- Start by creating a generic Azure OpenAI solution using Azure OpenAI Studio.

- Keep the default settings (parameters) for now. Grounding and adjustments can be made later.

- Focus on developing a good system prompt, for example:

You are a security operations assistant. You support security operation analysts during incident responses by providing instructions, answering queries, and looking up IOCs, CVEs, and other related information. You respond in a friendly yet professional tone, tailored to the needs of security analysts. You do not provide answers to unrelated requests. If you do not know the answer to a question, respond by saying, “I do not know the answer to your question.”

Example requests:

“How do I isolate an infected machine?”

“Look up IOC 12345.”

“Find details on CVE-2023-1234.”

- Publish your AI model as a web application. Note that currently there is no option to embed this into your Azure security web portals (it will be used as a separate website and API).

- Test and refine by making minor adjustments to the system prompt and settings.

- Direct your security teams to use the Azure OpenAI website during security investigations and provide reference links to the new application where appropriate (driving the SOC to this LLM).

- Create any related automation using the custom Azure OpenAI API.

- Continue to identify additional data sources for grounding, such as databases, files, websites, and API calls, based on monitoring and user feedback.
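The automation step calls the deployment's chat completions REST endpoint directly. The sketch below builds and sends such a request using only the Python standard library. It targets the Azure OpenAI chat completions API with the `2024-02-01` API version and the `api-key` header. The resource endpoint, deployment name, and environment variable names are hypothetical placeholders for your own deployment, and the `temperature` and `max_tokens` values are untuned starting points.

```python
import json
import os
import urllib.request
from typing import Dict, List

API_VERSION = "2024-02-01"  # a GA Azure OpenAI REST API version; newer versions exist


def build_chat_request(endpoint: str, deployment: str, api_key: str,
                       messages: List[Dict[str, str]], max_tokens: int = 800,
                       temperature: float = 0.2) -> urllib.request.Request:
    """Build a POST request for an Azure OpenAI chat completions deployment."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={API_VERSION}")
    body = json.dumps({"messages": messages, "max_tokens": max_tokens,
                       "temperature": temperature}).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST",
                                  headers={"Content-Type": "application/json",
                                           "api-key": api_key})


def send_chat(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical variable names; nothing is sent unless all three are set.
    endpoint = os.environ.get("AZURE_OPENAI_ENDPOINT")
    deployment = os.environ.get("AZURE_OPENAI_DEPLOYMENT")
    key = os.environ.get("AZURE_OPENAI_API_KEY")
    if endpoint and deployment and key:
        req = build_chat_request(endpoint, deployment, key,
                                 [{"role": "user", "content": "Find details on CVE-2023-1234."}])
        print(send_chat(req))
```

Building the request separately from sending it keeps the URL and payload easy to inspect and log, which supports the monitoring goals described earlier.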

Grounding Azure OpenAI is simple using the “add data sources” tab. Note that there is currently no Log Analytics option, even though much of the Azure security data is stored there; however, these logs can easily be exported to blob storage. Some options are not yet available in all Azure regions.

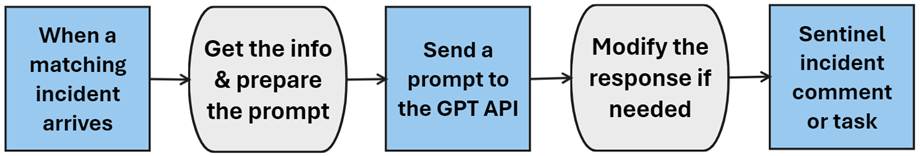

How to use Azure OpenAI with Microsoft Sentinel playbooks:

A working Azure OpenAI solution comes with an API URL and a sample web application. You can use this URL to automate chatbot communications. Just as with sanitized prompts sent to ChatGPT, your Microsoft Sentinel incidents can be enriched by sending requests to your private GPT API, and the responses can be recorded automatically as incident comments or tasks.

Prompt Examples:

- Explain how to recover from this issue [description] in 5 steps or less.

- Provide a KQL query related to this activity [description].

- Summarize and explain this CVE [CVE].

- Is this IP address [IP Address] suspected to be malicious and why?
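Inside a playbook, these prompts are usually filled in from incident fields before each API call. The sketch below expresses the four example prompts as templates and fills only those whose placeholders the incident actually provides. The placeholder and dictionary key names are hypothetical, not Microsoft Sentinel's incident schema. In a real playbook, each resulting prompt would be sent to your Azure OpenAI endpoint, and the response would be posted back as an incident comment.

```python
from string import Template
from typing import Dict

# The four example prompts as templates; placeholder names are hypothetical.
PROMPT_TEMPLATES = {
    "recovery": Template("Explain how to recover from this issue in 5 steps or less: $description"),
    "kql": Template("Provide a KQL query related to this activity: $description"),
    "cve": Template("Summarize and explain this CVE: $cve"),
    "ip": Template("Is this IP address $ip_address suspected to be malicious and why?"),
}


def build_enrichment_prompts(incident: Dict[str, str]) -> Dict[str, str]:
    """Return one prompt per template whose placeholders are all present in the incident."""
    prompts = {}
    for name, template in PROMPT_TEMPLATES.items():
        try:
            prompts[name] = template.substitute(incident)
        except KeyError:
            continue  # the incident has no value for this template's placeholder
    return prompts


if __name__ == "__main__":
    incident = {"description": "Multiple failed sign-ins followed by a success", "cve": "CVE-2023-1234"}
    for name, prompt in build_enrichment_prompts(incident).items():
        print(f"{name}: {prompt}")
```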

Logic App workflow sample:

Using Highly Customized LLMs

It is possible to create your own LLM solution that is more bare-bones than Azure OpenAI. This might be due to a lack of trust in OpenAI, prior expertise, or a desire to lower costs for large-scale implementations. You may also require generative AI solutions that are more specific than a prompt-based solution. Azure AI offers a wide variety of AI services, including machine learning (anomaly detection), vision (image), face recognition, OCR (image-to-text), translation, video indexing, bot services, sentiment analysis (moderation), text-to-speech, and text-to-avatar.

Early adopters and leaders in AI may favor custom solutions if they started before curated solutions like Azure OpenAI were publicly available. Organizations will still rely on some cloud service provider for computing power, databases, and web services, likely provided by Azure, AWS, or GCP. Cloud service providers offer an increasing number of AI platform solutions that make the complex task of building LLMs from scratch more feasible.

However, the trust factor may not improve with a more custom approach. Creating a custom solution involves significant complexity and risk, often requiring reliance on service providers like Microsoft. Custom solutions might be necessary for achieving specific goals such as speed, capability, scale, isolation, or cost savings, but they are more difficult to create and maintain. Staffing and training employees to support this effort may be a challenge. The rapid pace of development in LLM services can quickly render custom solutions obsolete, often before development efforts are complete.

If you need to create your own LLM or an AI solution that is more specific, consider Azure AI, especially if you are looking to go beyond the traditional chatbot model. Examples include facial recognition, monitoring images or video, adding accessibility features like text-to-speech, or detecting sentiment patterns in text for comment moderation.

Custom AI modeling is beyond the scope of this document. Refer to Microsoft's online documentation and training to learn more, starting with “What is Azure OpenAI Service?” on Microsoft Learn.

Closing Thoughts & Reference Links

Security Copilot is Microsoft’s premier generative AI solution for security operations and response. Embedded into a growing number of Microsoft security portals, Security Copilot is private, readily accessible, low maintenance, tuned for security, and grounded in your data. It is nearly a true set-it-and-forget-it solution, though it is still in its infancy, with features, pricing, and availability expected to evolve over time.

Customers may continue to look for alternative solutions for various reasons, including cost and trust. Microsoft also offers Copilot, Azure AI, and Azure OpenAI, which can replace many of the features provided by Security Copilot. The trade-offs with these alternatives include a higher total cost of ownership, increased complexity, higher privacy risk, the lack of an embedded experience, and often not being fully grounded in your own security data.

Consider this decision tree when evaluating generative AI solutions for your SOC:

Microsoft Security Solutions Usage:

- Non-Microsoft Security Solutions: If your organization does not heavily invest in Microsoft security solutions, relies on a security solution provider, or if security is mostly managed by a parent organization, you may not need a dedicated LLM solution for security operations.

- Microsoft Security Solutions: If you rely heavily on Microsoft security solutions managed by your own team, your organization will benefit greatly from a security AI strategy built on Security Copilot, if possible.

Team Size and Capabilities:

- Small Security Team: If you have a small team or struggle to hire and retain security employees, consider a SaaS solution like Security Copilot. The higher cost of Security Copilot may be less significant if you require fewer Security Compute Units (SCUs). Security Copilot can act as a force multiplier for a smaller, less experienced team.

- Medium-Sized Security Team: If you have a medium-sized team with knowledgeable employees, consider creating an AI strategy based on public, private, or custom (Azure OpenAI) solutions. The choice will depend on your budget, trust in AI providers, and privacy prioritization. For instance, a university might choose a public or private AI-based solution, while a bank or government agency might prefer the privacy and controls of Azure OpenAI. However, Security Copilot should still be evaluated.

- Large Security Team: If you have a large team or are a service provider or parent organization supporting a large international conglomerate, consider Security Copilot, Azure OpenAI, and Azure AI. Larger organizations may have the talent to design and support alternative solutions, but Security Copilot is also attractive for large teams with high turnover.

Availability and Requirements:

- Immediate Needs: If your organization cannot wait for Security Copilot to become available in your Azure enclave, or if some aspects of Security Copilot are unacceptable, consider all the alternatives discussed. When Security Copilot is not an option, Azure OpenAI is the best choice in terms of complexity, cost, and capabilities.

Reference Links:

- Security Copilot Documentation

- Security Copilot Pricing

- Azure AI Services Pricing

- Security Copilot Standalone Portal: https://securitycopilot.microsoft.com/

- Microsoft Azure AI Documentation

- Microsoft Azure OpenAI Documentation

- Microsoft Defender for Threat Intelligence Documentation

- ChatGPT

- Base64 Encode

- VirusTotal

- Protect Gen AI using MDCA

- ChatGPT Playbook Example

- Create Custom Copilots with Azure OpenAI

- Video: John Savill – Which AI should you Use?

- Video: John Savill – Microsoft Security Copilot